Why Parents Should Be Cautious About Mental Health Chatbots for Kids

In Summary:

- AI “therapists” are not real therapists; they lack human judgment, ethical responsibility, and the ability to safeguard children in emotional distress.

- Children are uniquely vulnerable and may misinterpret AI responses, leading to reinforced negative thinking or emotional harm.

- Emotional attachment to chatbots can replace real support, causing children to withdraw from parents, caregivers, and healthy relationships.

- Some AI tools undermine family dynamics, validating resentment or framing normal parental boundaries as harmful or controlling.

- Data privacy and commercial incentives pose serious risks, with children’s personal emotional data potentially stored, analysed, or monetised.

Artificial intelligence is rapidly finding its way into every part of family life. From homework help to entertainment, children are now growing up alongside chatbots that can talk, joke, role-play and, increasingly, offer emotional support.

Adults may benefit from these advances in technology and from easier access to a listening ear, but parents should be extremely cautious about allowing children to use AI therapists and mental-health chatbots. Although these tools are often marketed as helpful, there is growing evidence that they can reinforce harmful thinking, blur emotional boundaries, and in some cases actively worsen a child’s mental health.

What Is an AI Therapist, Exactly?

An AI therapist is typically a chatbot powered by a large language model, trained to simulate therapeutic conversations. These tools often describe themselves using phrases like:

- AI emotional support

- Digital therapy

- Psychologist AI chat

- Mental health companion

They usually work by encouraging users to share feelings, offering reflective responses, and suggesting coping strategies. Some claim to use techniques from cognitive behavioural therapy (CBT) or mindfulness practices.

The key issue is this: these systems are not therapists. They do not understand emotions, do not have accountability, and do not have the professional judgment needed to support someone, especially a child, during emotional distress.

Why AI Emotional Support Is Especially Risky for Children

Children’s and teenagers’ brains are still developing, particularly the parts responsible for impulse control, emotional regulation, and critical thinking.

This makes them uniquely vulnerable to the potential problems posed by AI emotional support tools. Here’s why:

1. AI Chatbots Can Reinforce Harmful Thoughts

One of the most serious risks is that an AI therapist chatbot may unintentionally reinforce negative or dangerous beliefs.

Unlike a trained human therapist, AI does not truly understand context or risk. If a child expresses self-hatred, anger toward parents, or thoughts of self-harm, the AI may respond in ways that validate those feelings without challenging them appropriately.

There have already been documented cases of chatbots:

- Encouraging users to withdraw from family relationships

- Normalising extreme emotional distress without urging real-world help

- Failing to respond appropriately to suicidal ideation

For a child, this kind of interaction can be deeply harmful.

2. Children May Form Emotional Attachments to AI

Many AI therapy tools are designed to feel warm, empathetic, and always available. For lonely or struggling children, this can be powerfully attractive. The danger lies in what the AI lacks: it is not sentient, so it cannot provide healthy emotional reciprocity.

When children begin relying on an AI therapist for comfort, they may:

- Pull away from real relationships

- Stop talking to parents or caregivers

- Avoid seeking help from trusted adults

In extreme cases, children may treat the AI as their primary emotional support. That role should always sit within the family, not be delegated to a piece of software.

3. No Safeguards, No Accountability

A licensed therapist operates under strict ethical and legal guidelines. An AI does not.

Most psychologist AI chat tools include disclaimers stating they are not a replacement for professional care, yet their marketing often suggests otherwise. There is no guarantee that conversations are:

- Age-appropriate

- Actively monitored for risk

- Designed with child psychology in mind

If something goes wrong, there is no clear accountability, and no therapist responsible for the outcome.

AI Therapy vs Real Therapy: A Critical Difference

It’s important to understand what real therapy provides that AI cannot.

A qualified child psychologist can:

- Recognise warning signs of serious mental health issues

- Adjust their approach based on non-verbal cues

- Involve parents or safeguarding services when necessary

- Build a relationship grounded in trust, ethics, and responsibility

An AI therapist can do none of these things. It generates text based on patterns, not on human understanding or lived experience.

For adults who understand these limitations, AI tools may sometimes be used as journaling aids or wellness tools. For children, the risks far outweigh any potential benefit.

The Link Between AI Chatbots and Family Conflict

Another concerning trend is the way some AI chatbots frame family relationships.

Parents have reported cases where AI companions:

- Encourage children to view parents as controlling or harmful

- Validate anger or resentment without balance

- Frame parental rules as oppression

When a child hears these messages repeatedly from a source they trust, it can damage family relationships and undermine healthy authority.

This is especially worrying when children are already emotionally vulnerable.

What Parents Can Do to Protect Children from AI Therapist Harm

1. Talk Openly About AI and Mental Health

Let children know that while AI can seem understanding, it is not a person and cannot replace real help. Encourage them to talk to you or another trusted adult if they’re struggling.

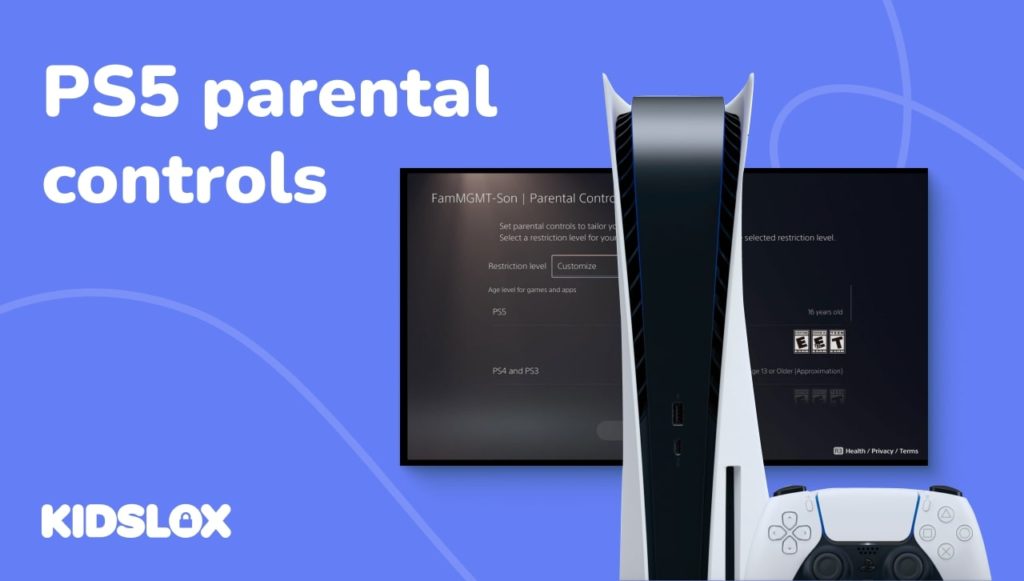

2. Monitor App and Website Use

Use parental control tools to:

- Monitor chatbot usage

- Block known AI role-play or therapy platforms

- Set screen-time limits

Kidslox can help parents stay aware of what apps and sites their children are using, including AI-based tools.

3. Take Emotional Struggles Seriously

If your child is seeking out an AI therapist chatbot, it may be a sign they need support. Treat it as a signal, not a problem to punish.

Consider speaking with:

- A school counsellor

- A licensed child therapist

- Your GP, family doctor or paediatrician

4. Encourage Real-World Support Systems

Help children build healthy coping strategies through:

- Strong family communication

- Friendships and social activities

- Professional mental health support when needed

AI emotional support should never be the foundation of a child’s wellbeing.

The Data Privacy and Commercialisation Problem

Beyond emotional and psychological risks, parents should also be concerned about how AI therapy tools handle children’s data. Conversations with “AI therapists” often involve deeply personal information: fears, family conflicts, mental health struggles, and identity questions. Unlike licensed therapists, many AI chatbot providers are not bound by medical confidentiality laws or child safeguarding standards.

This raises serious questions. Where is this data stored? Who has access to it? Is it used to train future models, improve marketing, or personalise advertising? In many cases, the answers are unclear and children are unlikely to understand what they are consenting to when they click “I agree.”

Some platforms may retain conversation logs indefinitely. Others may share anonymised data with third parties. Even when companies claim strong privacy protections, breaches and misuse remain real risks. For children, the idea that their most vulnerable thoughts could be stored, analysed, or monetised is deeply troubling.

There is also the issue of commercial incentives. AI mental health apps are products, not caregivers. Their goal is often to maximise engagement, encourage daily use, and keep users emotionally invested. This can unintentionally reward dependency rather than resilience, which is especially harmful for young users still learning how to regulate emotions and seek help appropriately.

Why Regulation Hasn’t Caught Up Yet

The rapid growth of AI therapy tools has far outpaced regulation. Most countries lack clear laws governing AI emotional support for minors. App store age ratings are inconsistent, and enforcement is weak. As a result, children can easily access tools that were never designed with child development or safeguarding in mind.

Until clear standards exist, parents are effectively the last line of defence.

A Final Thought for Parents

Technology will continue to evolve, and AI will undoubtedly play a role in future mental health care. But right now, children need humans, not algorithms, when they are hurting.

Curiosity about AI is normal. Needing emotional support is human. The role of parents is to make sure those needs are met safely, ethically, and with real care, not outsourced to software that cannot truly understand what a child is going through.